A Leap Forward in AI

In December 2023, Google DeepMind introduced Gemini, a family of large language models (LLMs) that Google presents as the next generation of its AI. Rather than being limited to text, Gemini is built to handle text, images, audio, code and video, which makes it natively multimodal.

This article explores what Gemini is, how it works, how it’s being used today, what benefits it offers, where it still struggles, and what the future might hold.

1. What Is Google Gemini?

At its core, Google Gemini is a family of large-scale AI models designed to understand and generate content across multiple modalities. According to Google:

- It can take text, images, audio, video and code as input and generate text and code in response, with native image and audio output added in later versions.

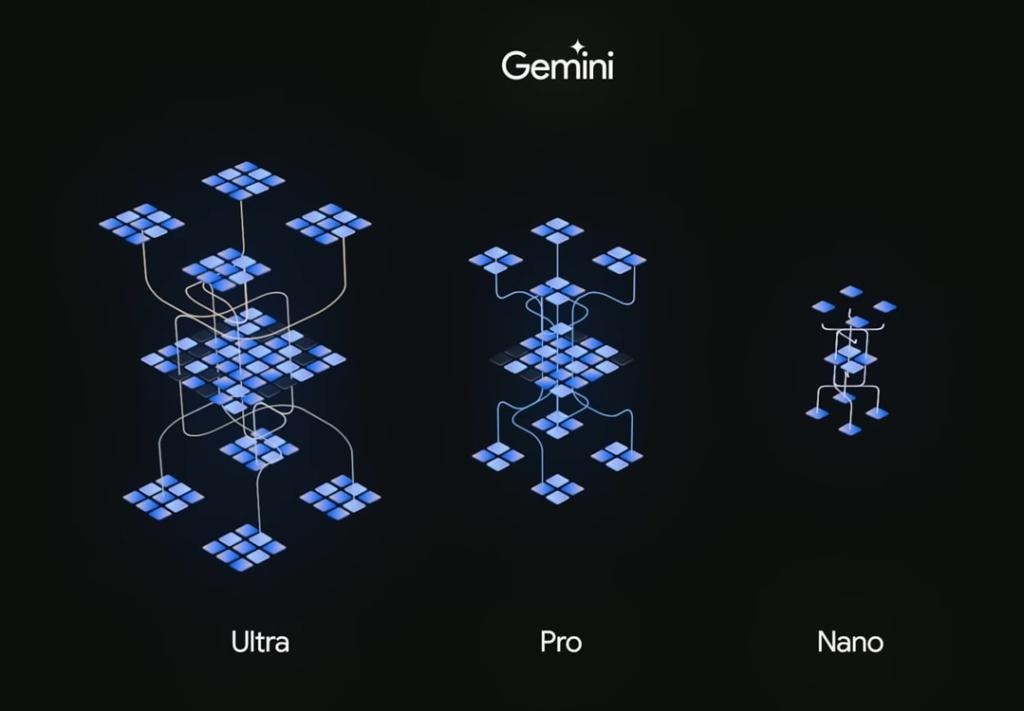

- It launched in three sizes: Gemini Ultra, Gemini Pro and Gemini Nano, optimized respectively for highly complex tasks, a broad range of tasks and on-device efficiency.

- It succeeds earlier Google models such as LaMDA and PaLM 2, which previously powered the Bard chatbot, and is integrated into the wider Google ecosystem (Search, Assistant, Workspace).

In short, Google sees Gemini as its flagship AI model—a foundation for everything from advanced research tasks to everyday digital assistant features.

2. How Does Gemini Work?

2.1 Multimodal Architecture

Unlike many earlier AI models designed for text only, Gemini was pre-trained on several modalities from the start, so it natively handles mixed types of input. That means you can give it a diagram, a voice clip, a text prompt and a video frame in the same session, and it will reason across them. For example, you might ask it to analyze a screenshot and then ask follow-up questions in text, or ask it to generate code from a photo of a UI wireframe.
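To make the idea of a mixed prompt concrete, here is a minimal Python sketch of how an image and a text question can be packaged into a single request. It assumes the JSON shape used by the public Gemini REST API (a list of `contents`, each holding `parts` that are either `text` or base64-encoded `inline_data`); the helper name `build_multimodal_request` is hypothetical, and the code only builds the request body without contacting any service.

```python
import base64
import json


def build_multimodal_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a generateContent-style request body that mixes an image and a text prompt.

    Assumption: the request shape follows the public Gemini REST API,
    where each turn holds "parts" that are either text or inline binary data.
    """
    image_part = {
        "inline_data": {
            "mime_type": mime_type,
            # Binary data must be base64-encoded to travel inside JSON.
            "data": base64.b64encode(image_bytes).decode("ascii"),
        }
    }
    text_part = {"text": prompt}
    # A single user turn carries both parts, so the model sees them together.
    return {"contents": [{"role": "user", "parts": [image_part, text_part]}]}


if __name__ == "__main__":
    placeholder_png = b"\x89PNG\r\n\x1a\n"  # stand-in bytes for a real wireframe screenshot
    body = build_multimodal_request("Generate HTML for this wireframe.", placeholder_png)
    print(json.dumps(body, indent=2))
```

Putting the image and the question in the same turn is what lets the model reason over both at once, rather than describing the image first and answering the question separately.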

2.2 Training & Infrastructure

Google trained Gemini on its own Tensor Processing Units (TPU v4 and TPU v5e), using large curated datasets spanning text, images, audio and video.

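Benchmark results published by Google report that Gemini Ultra scored 90.0% on MMLU (Massive Multitask Language Understanding), making it, by Google's account, the first model to outperform human experts on that benchmark. Google also reported state-of-the-art results on 30 of 32 widely used academic benchmarks.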

2.3 Context Window & Reasoning

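Gemini supports long-context inputs such as large documents, codebases and extended conversations; Gemini 1.5 Pro, released in 2024, extended the context window to a million tokens or more. The models are also designed to reason rather than simply respond: to work through multi-step problems, combine information from several modalities and arrive at deeper answers than surface-level responses.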
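Whether a given document fits into a context window comes down to arithmetic on tokens. The sketch below estimates a token count with the rule of thumb Google's Gemini documentation gives (roughly four characters per token). The function names and the output reserve are hypothetical choices for illustration; an exact count would require the API's token-counting endpoint.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count from character length (a heuristic, not a real tokenizer)."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, context_window: int, reserve_for_output: int = 8_192) -> bool:
    """Return True if the estimated prompt size plus room for the reply fits the window.

    reserve_for_output is an arbitrary illustrative default, not a Gemini limit.
    """
    return estimate_tokens(text) + reserve_for_output <= context_window


if __name__ == "__main__":
    book = "x" * 1_200_000  # stand-in for a ~1.2 million-character document
    print(estimate_tokens(book))             # 300000
    print(fits_in_context(book, 32_000))     # False
    print(fits_in_context(book, 1_000_000))  # True
```

Published window sizes range from about 32,000 tokens for the original Gemini 1.0 models to a million or more for Gemini 1.5 Pro, so the same document can fit comfortably in one model and not at all in another.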

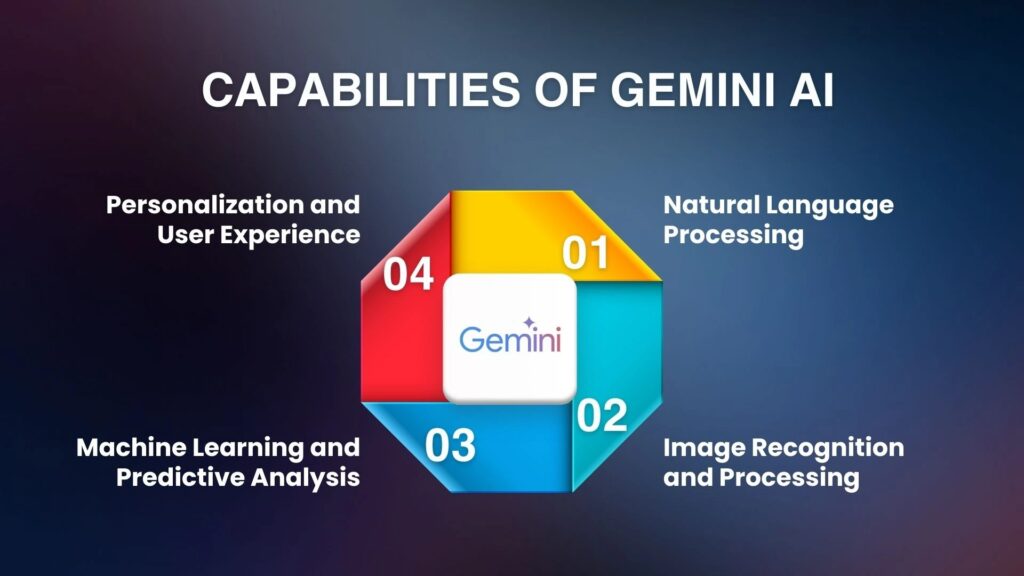

3. Key Features That Set Gemini Apart

Here are some of the standout features of Gemini, as highlighted by Google and technology analysts:

- Native multimodality: Text, image, audio, video and code support in one model.

- Deep reasoning: Ability to solve complex tasks across domains—math, science, law, code.

- Scalable design: Runs on everything from powerful cloud infrastructure to mobile devices, with Gemini Nano providing on-device AI.

- Ecosystem integration: Works across Google Search, Workspace (Docs, Gmail) and Android devices.

- Developer accessibility: Available through the Gemini API, Google AI Studio and Vertex AI for building custom apps and workflows.
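For developers, a Gemini API call is essentially an authenticated HTTPS POST with a JSON body. The sketch below uses only Python's standard library. The `v1beta` endpoint path, the `x-goog-api-key` header and the model name `gemini-2.5-flash` reflect the public REST documentation as we understand it and should be checked against the current docs; the helper names are hypothetical. Google's official SDKs are usually the simpler choice in practice.

```python
import json
import os
import urllib.request

# Assumption: endpoint root and model name as documented at the time of writing;
# check the current Gemini API docs for available models.
API_ROOT = "https://generativelanguage.googleapis.com/v1beta/models"
DEFAULT_MODEL = "gemini-2.5-flash"


def build_request(prompt: str, api_key: str, model: str = DEFAULT_MODEL) -> urllib.request.Request:
    """Prepare (but do not send) a text-only generateContent HTTP request."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response: dict) -> str:
    """Concatenate the text parts of the first candidate in a response, if any."""
    candidates = response.get("candidates", [])
    if not candidates:
        return ""
    parts = candidates[0].get("content", {}).get("parts", [])
    return "".join(part.get("text", "") for part in parts)


def generate(prompt: str, model: str = DEFAULT_MODEL) -> str:
    """Send the request; needs network access and a GEMINI_API_KEY environment variable."""
    req = build_request(prompt, os.environ["GEMINI_API_KEY"], model)
    with urllib.request.urlopen(req, timeout=60) as resp:
        return extract_text(json.load(resp))
```

Keeping request construction and response parsing separate from the network call makes both easy to test without an API key; only `generate` actually talks to the service.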

4. Real-World Use Cases

Let’s look at how Gemini is being used across industries and everyday life.

4.1 Productivity & Personal Assistant

For millions of users, Gemini acts as a supercharged assistant, drafting emails, summarizing documents, generating presentation slides or helping brainstorm ideas. It is already built into Android and Google Workspace.

4.2 Content Creation & Design

Because Gemini understands text and images together, creators can make requests such as “Design a logo based on this photo” or “Generate a storybook using this drawing.” This opens new possibilities for rich media content.

4.3 Coding & Development

According to GeeksforGeeks, Gemini supports code generation, debugging, explanations of algorithms and mixed prompts (text plus screenshots).

Developers can supply UI wireframes or code snippets and get suggestions or improved code in return.

4.4 Enterprise & Analytics

Companies can use Gemini via APIs for customer support automation, data analysis, legal document review, research summarization and more—thanks to its reasoning ability across long documents and mixed media inputs.

4.5 Healthcare & Science (Emerging)

Although it is still a research model, a variant called Med-Gemini has shown strong results on medical benchmarks, including analyzing X-rays, CT scans and genetic data and summarizing medical texts.

This suggests possible future uses in diagnostics, research summarization and personalized medicine.

5. Benefits of Using Gemini

Here’s how Gemini brings value across the board:

- Efficiency: Drafting, coding and summarizing take fewer steps, so complex tasks get done faster.

- Versatility: One model handles many modalities, so organizations don’t need separate models for image, text and code tasks.

- Integration-ready: Because it’s built into Google’s ecosystem, it’s easier to adopt for businesses and individual users.

- Creative augmentation: Designers, writers and developers can use it both for inspiration and for executing their ideas.

- Scalability: From light mobile tasks to heavy cloud workloads, Gemini covers a wide range of hardware and workload types.

6. Limitations & Considerations

No model is perfect—Gemini also has its caveats.

- Accuracy & Bias: As with all AI models, there is a risk of incorrect outputs or embedded bias. In February 2024, Google paused Gemini’s ability to generate images of people after it produced historically inaccurate depictions.

- Privacy and Data Use: Integration into messaging apps and devices raises privacy questions—users must manage data-sharing settings carefully.

- Resource Requirements: While Gemini Nano is efficient, the largest model, Gemini Ultra, still requires substantial cloud infrastructure.

- Cost & Access: Advanced features or enterprise APIs may require a subscription or usage fees; not every user gets full capability for free.

- Over-hype Risk: Marketing claims can outpace everyday experience, and some users report inconsistent results when comparing Gemini with rival models.

7. How Gemini Stacks Up Against Competitors

When comparing Gemini with other major AI models (such as GPT‑4 or Claude 3):

- Gemini’s native multimodality (text, image, audio and video) gives it an edge in versatility over many competitors, which mostly handle text and images.

- Google’s published benchmarks report that Gemini Ultra outperforms human experts on MMLU and sets new state-of-the-art results on a range of multimodal tasks.

- Integration into Google’s ecosystem gives it a unique advantage in accessibility and deployment (Search, Workspace, Android).

- On the downside: Some users argue that in day-to-day tasks, the difference is less visible and user experience varies.

In other words, Gemini shines in complex and multimodal scenarios but may not feel drastically different for simpler tasks.

8. Future Directions & What to Watch

The roadmap for Gemini and similar models includes several key trends:

- Longer context windows: Gemini 1.5 Pro already accepts a million tokens or more, and handling ever larger inputs in one session (entire books, large codebases, large databases) remains an active direction.

- Physical embodiment: Research such as Gemini Robotics explores models that control robots by combining vision, language and action.

- Hyper-personalization: On-device models such as Nano could offer more private, personalized AI assistants with fewer cloud dependencies.

- Domain specialization: Models like Med-Gemini suggest specialization in fields like medicine, law or scientific research.

- Regulation & ethics: As capability grows, regulation around AI safety, bias, privacy and accountability will become more critical.

- Ubiquitous deployment: From smartphones to IoT devices to vehicles, Gemini-class models may become seamlessly embedded in everyday devices.

9. Practical Tips for Using Gemini

Here are some suggestions if you’re planning to work with Gemini—whether as an individual or organization:

- Be explicit about modalities: When you combine inputs (for example, an image, text and a voice clip), state what each input is and how Gemini should use it.

- Leverage prompt context: Because Gemini handles long contexts, provide background, goals and examples up front.

- Review outputs critically: Don’t treat it as flawless; verify its answers, especially when legal, scientific or factual statements are involved.

- Protect your privacy: Review permissions and data sharing, especially when integrating with mobile apps or messaging.

- Explore integration: If you’re a business, check the API and Google AI Studio documentation to embed Gemini into workflows.

- Stay updated: Releases move fast (Gemini 2.5, the Flash models, Nano), so features you rely on today may change quickly.
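The prompt-related tips above (describing each input, and giving background, goals and examples up front) can be turned into a reusable template. The sketch below is one possible convention, not a format Gemini requires: `PromptSpec` and `render_prompt` are hypothetical names, and the section labels are arbitrary.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a well-structured prompt."""
    task: str
    background: str = ""
    goals: list[str] = field(default_factory=list)
    examples: list[tuple[str, str]] = field(default_factory=list)  # (input, desired output)
    attachments: dict[str, str] = field(default_factory=dict)  # attachment label -> how to use it


def render_prompt(spec: PromptSpec) -> str:
    """Place background, goals, attachment roles and examples ahead of the task itself."""
    sections = []
    if spec.background:
        sections.append(f"Background:\n{spec.background}")
    if spec.goals:
        sections.append("Goals:\n" + "\n".join(f"- {goal}" for goal in spec.goals))
    if spec.attachments:
        lines = [f"- {name}: {role}" for name, role in spec.attachments.items()]
        sections.append("Attached inputs:\n" + "\n".join(lines))
    for i, (example_in, example_out) in enumerate(spec.examples, start=1):
        sections.append(f"Example {i}:\nInput: {example_in}\nOutput: {example_out}")
    # The task comes last so it follows all the context the model needs.
    sections.append(f"Task:\n{spec.task}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    spec = PromptSpec(
        task="Summarize the attached contract in five bullet points.",
        background="We are a small design studio reviewing a client agreement.",
        goals=["Flag payment terms", "Use plain language"],
        attachments={"contract.pdf": "the document to summarize"},
    )
    print(render_prompt(spec))
```

The rendered string would then go in as the text part of a request, alongside whatever images, audio or documents it describes.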

10. Conclusion: Navigating the Age of Multimodal AI

Google Gemini represents a significant leap in how artificial intelligence interacts with the world—not just processing text, but interpreting images, audio, code, and video in one unified model. Its strength lies in versatility, integration, and reasoning across modalities.

For users, this means smarter assistants, faster workflows and new creative possibilities. For developers and enterprises, it provides a platform to build intelligent applications that span domains and data types. But with great power comes great responsibility: ethical safeguards, privacy controls and accuracy verification become indispensable.

As the AI landscape continues to evolve, Gemini stands as a landmark of where we are—and hints at where we’re headed. From on-device assistants to AI robots, the future is hybrid, multimodal and deeply integrated into everyday life.

In embracing Gemini, we embrace a new era of AI, one where the boundaries of human-machine interaction blur and intelligence becomes more fluid, more intuitive and more capable. Whether you’re writing, coding, designing or just seeking smarter digital help, Gemini is a major milestone. The question now: how will you use it?